Coordination Patterns in MPI: Basic message passing

The examples in this section illustrate the fundamental pattern in software for distributed systems, and for any system in which individual processes, not threads, coordinate by communicating with each other via message passing. Now we get to see the message passing part of the name MPI (Message Passing Interface)! The portion of the patterns diagram pertaining to these examples is shown on the left.

04. Message passing deadlock, using Send-Receive of a single value

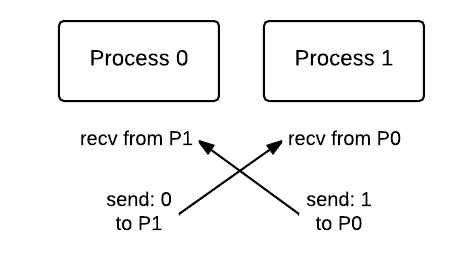

This example shows the pattern of sending and receiving messages between processes. The code performs two-way communication within pairs of processes: each pair consists of one even-id process and one odd-id process, and the two exchange a single integer.

Note

The id of a process is sometimes called a rank, so we use these terms interchangeably here.

An integer value will be sent and received by the following sender-receiver pairs:

(rank 0, rank 1), (rank 2, rank 3), (rank 4, rank 5), …

On lines 18-20 in the code below, each odd process (1, 3, 5, …) both receives a message from and sends a message to the process whose id is one less than its own (its neighbor to the left in the pairs shown above). The message being passed is the rank of the current process (set on line 16). On lines 23-25, each even process (0, 2, 4, …) receives from and sends to the process whose id is one greater than its own (its neighbor to the right).
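The pairing rule described above comes down to a small piece of arithmetic. The following is a minimal sketch in plain C with no MPI calls; the names `partner_of` and `print_pairs` are hypothetical and do not come from the example's own listing.

```c
#include <stdio.h>

/* Hypothetical helper (not part of the original example):
 * an odd rank is paired with its left neighbor (rank - 1),
 * an even rank with its right neighbor (rank + 1). */
int partner_of(int rank) {
    return (rank % 2 == 1) ? rank - 1 : rank + 1;
}

/* Print every complete pair among ranks 0 .. numProcesses - 1.
 * A trailing unpaired rank (odd numProcesses) is skipped. */
void print_pairs(int numProcesses) {
    for (int rank = 0; rank + 1 < numProcesses; rank += 2) {
        printf("(rank %d, rank %d)\n", rank, partner_of(rank));
    }
}
```

Calling `print_pairs(6)` from a `main` function lists the three pairs (0, 1), (2, 3) and (4, 5). Note that the rule is symmetric: applying `partner_of` twice returns the original rank.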

To do before you go on:

Find documentation for the MPI functions MPI_Recv and MPI_Send. Make sure that you know what each parameter is for. You could also look up MPI_Datatype, the type of the argument that tells MPI what kind of data is being sent. MPI_INT is one of its predefined values.

Conceptually, the running code is executing like this for 2 processes, where time is moving from top to bottom:

Look at the code below for the send and receive being executed by odd and even process ids.
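As a point of reference, here is a minimal sketch of a receive-then-send exchange of the kind described in this example. It is an illustration written for this text, not the example's own listing, so the line numbers mentioned above will not match it. The variable names and the message tag 0 are assumptions, and the sketch assumes it is launched with an even number of processes.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char **argv) {
    int id = -1, numProcesses = -1;
    int sendValue = -1, receivedValue = -1;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &id);            /* this process's rank */
    MPI_Comm_size(MPI_COMM_WORLD, &numProcesses);  /* total process count */

    sendValue = id;  /* the message is this process's own rank */

    /* Assumes an even number of processes, so every rank has a partner. */
    if (id % 2 == 1) {
        /* Odd rank: partner is the neighbor to the left (id - 1).
         * The blocking receive is called before the send. */
        MPI_Recv(&receivedValue, 1, MPI_INT, id - 1, 0,
                 MPI_COMM_WORLD, MPI_STATUS_IGNORE);
        MPI_Send(&sendValue, 1, MPI_INT, id - 1, 0, MPI_COMM_WORLD);
    } else {
        /* Even rank: partner is the neighbor to the right (id + 1).
         * Again the blocking receive is called before the send. */
        MPI_Recv(&receivedValue, 1, MPI_INT, id + 1, 0,
                 MPI_COMM_WORLD, MPI_STATUS_IGNORE);
        MPI_Send(&sendValue, 1, MPI_INT, id + 1, 0, MPI_COMM_WORLD);
    }

    printf("Process %d of %d received %d\n", id, numProcesses, receivedValue);

    MPI_Finalize();
    return 0;
}
```

A program like this is typically built with an MPI compiler wrapper such as mpicc and started with a launcher such as mpirun, using the -np flag to choose the number of processes.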

To do:

What happens when you run this code with 2 or more processes?

Can you explain why this program deadlocks and how we might avoid this situation?

Note

We introduce this problematic example because it is a bug that we can accidentally introduce into our code. The next example provides the solution to this problem.

05. Message passing 1, using Send-Receive of a single value

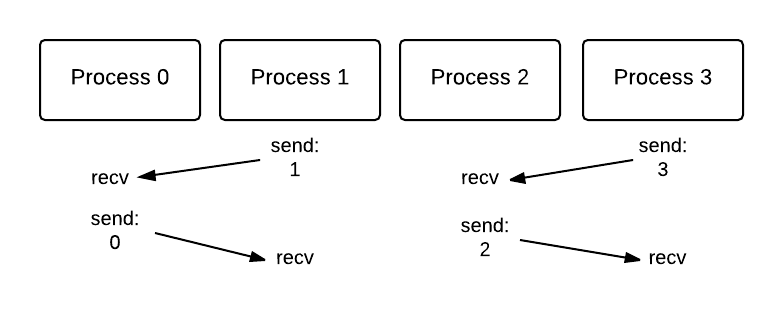

The previous example highlights how a deadlock can arise from message passing. Next we show one possible way to fix the problem. We can avoid the deadlock by simply reversing the order of one of the receive/send pairs, so that one process in each pair receives and then sends while its partner sends and then receives. Note this in the code example further below. As shown in the following diagram, where time is moving from top to bottom, even processes receive and then send, and odd processes send and then receive.
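The reversed ordering can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the example's own listing: the variable names and the message tag 0 are made up for the illustration, and, like the example under discussion, it assumes an even number of processes.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char **argv) {
    int id = -1, numProcesses = -1;
    int sendValue = -1, receivedValue = -1;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &id);
    MPI_Comm_size(MPI_COMM_WORLD, &numProcesses);

    sendValue = id;  /* the message is this process's own rank */

    /* Assumes an even number of processes, so every rank has a partner. */
    if (id % 2 == 1) {
        /* Odd rank: send first, then receive, with the left neighbor. */
        MPI_Send(&sendValue, 1, MPI_INT, id - 1, 0, MPI_COMM_WORLD);
        MPI_Recv(&receivedValue, 1, MPI_INT, id - 1, 0,
                 MPI_COMM_WORLD, MPI_STATUS_IGNORE);
    } else {
        /* Even rank: receive first, then send, with the right neighbor. */
        MPI_Recv(&receivedValue, 1, MPI_INT, id + 1, 0,
                 MPI_COMM_WORLD, MPI_STATUS_IGNORE);
        MPI_Send(&sendValue, 1, MPI_INT, id + 1, 0, MPI_COMM_WORLD);
    }

    printf("Process %d of %d received %d\n", id, numProcesses, receivedValue);

    MPI_Finalize();
    return 0;
}
```

With 2 processes, each process reports its partner's rank: process 0 receives 1 and process 1 receives 0. The order in which the two lines are printed can vary between runs.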

To do:

Run using 4, 6, 8, and 10 processes by changing the -np flag. Note that the program now completes without deadlocking. Why does reversing one of the receive/send pairs allow us to avoid the deadlock altogether?

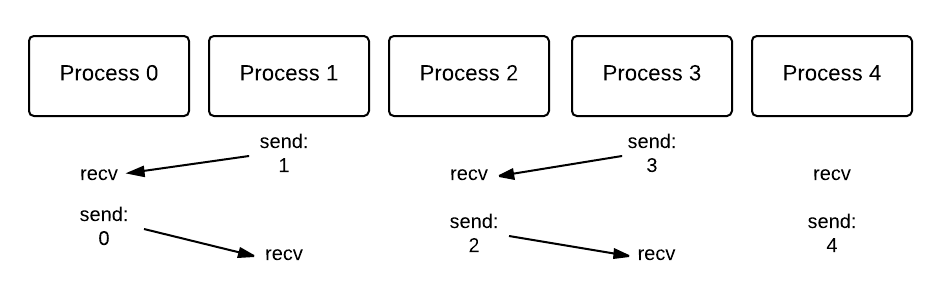

Run using 5 processes. Which process threw an error, and why was the error thrown? Hint: See diagram below.

Note

This solution avoids the deadlock by ensuring that, when an even number of processes is used, one process in each pair first sends its value to a neighbor that is blocked waiting to receive it. Once the neighbor has received that value, it sends its own message back to its partner, which is by then waiting to receive. This is a common pattern in MPI programs when pairs of processes need to exchange information.

We can still get errors, however, when code designed for an even number of processes is run with an odd number. Can you determine how you might fix this in the code above?

06. Message passing 2, using Send-Receive of an array of values

The messages sent and received by processes can be of types other than integers. Here the message that is being passed is a string (array of chars). This example follows the previous message passing examples in that it passes strings between pairs of odd and even rank processes.

We use dynamic memory allocation for sendString and receivedString. Dynamic memory allocation lets a program obtain more memory while it is running and release memory that is no longer needed, so the programmer manages that memory explicitly. The calls to malloc on lines 28 and 29 each allocate a block of SIZE bytes, one for sendString and one for receivedString.
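The allocate, use, release cycle can be sketched in plain C without any MPI calls. The function name `make_message`, the value of `SIZE`, and the message text are all hypothetical choices made for this illustration; they are not taken from the example's own listing.

```c
#include <stdio.h>
#include <stdlib.h>

#define SIZE 32  /* hypothetical buffer size in bytes */

/* Hypothetical helper: allocate SIZE bytes on the heap and fill them
 * with a short text that mentions the given rank.
 * Returns NULL if the allocation fails.
 * The caller owns the returned buffer and must release it with free(). */
char *make_message(int rank) {
    char *buffer = malloc(SIZE * sizeof(char));
    if (buffer == NULL) {
        return NULL;
    }
    /* snprintf never writes more than SIZE bytes, terminator included. */
    snprintf(buffer, SIZE, "Hello from process %d", rank);
    return buffer;
}
```

A caller would use it along the lines of `char *msg = make_message(3);`, check that `msg` is not NULL, use the string, and finally call `free(msg);`. Every block obtained from malloc needs exactly one matching call to free.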

To do:

Review documentation for the MPI functions MPI_Recv and MPI_Send; make sure you understand what the second parameter is for in each case and why the code above is written as it is.

What is the free function doing in this code? Why must we apply the free function to both sendString and receivedString?

Run with 2, 4, and 8 processes. Trace the code and match it against the output you get.

07. Message passing 3, using Send-Receive with conductor-worker pattern

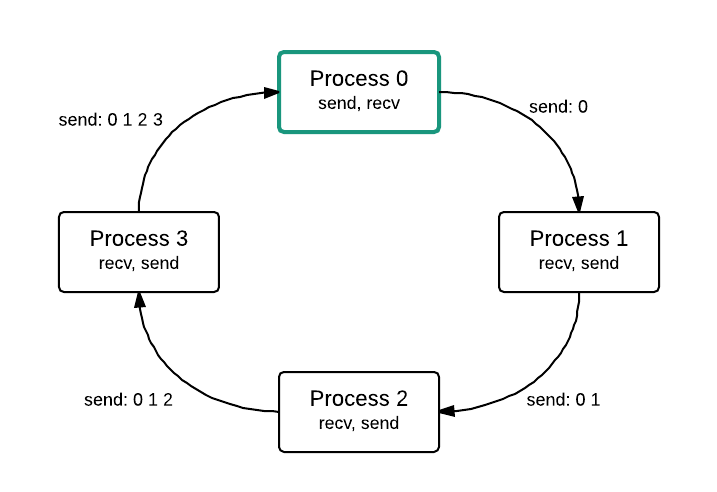

Sending and receiving often occur in pairs. Here we investigate a scenario in which this is not the case. Suppose we have four processes, 0 through 3, arranged in a “ring”. We want each process to pass a string of sequential ranks on to the next process, appending its own rank along the way. Process 0 begins by sending its rank to process 1. Process 1 receives a string containing a 0. Next, process 1 adds its rank to the string and sends the string to process 2. Then, process 2 receives the string containing 0 and 1, and so on. This continues until process 0 receives the final string from the last process (the process with the largest rank). Thus, process 0 is both the beginning and the end of the “ring”. This type of circular dependency can be thought of like this:
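In code, a ring of this kind might look roughly like the sketch below. It is an illustration written for this text, not the example's own listing: the buffer size `SIZE`, the variable names, the message tag 0, and the choice to stop when fewer than two processes are used are all assumptions.

```c
#include <mpi.h>
#include <stdio.h>
#include <string.h>

#define SIZE 128  /* hypothetical capacity of the message buffer */

int main(int argc, char **argv) {
    int id = -1, numProcesses = -1;
    char message[SIZE] = "";
    char rankText[16];

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &id);
    MPI_Comm_size(MPI_COMM_WORLD, &numProcesses);

    /* Assumption: a ring needs at least two processes. */
    if (numProcesses < 2) {
        if (id == 0) {
            printf("Please run with at least 2 processes.\n");
        }
        MPI_Finalize();
        return 0;
    }

    int next = (id + 1) % numProcesses;                 /* neighbor we send to */
    int prev = (id + numProcesses - 1) % numProcesses;  /* neighbor we receive from */

    if (id == 0) {
        /* Process 0 starts the ring with its own rank, then waits
         * for the string to come back from the last process. */
        snprintf(message, SIZE, "%d", id);
        MPI_Send(message, (int)strlen(message) + 1, MPI_CHAR, next, 0,
                 MPI_COMM_WORLD);
        MPI_Recv(message, SIZE, MPI_CHAR, prev, 0,
                 MPI_COMM_WORLD, MPI_STATUS_IGNORE);
        printf("Process 0 received the final string: %s\n", message);
    } else {
        /* Every other process receives, appends its rank, and forwards. */
        MPI_Recv(message, SIZE, MPI_CHAR, prev, 0,
                 MPI_COMM_WORLD, MPI_STATUS_IGNORE);
        snprintf(rankText, sizeof rankText, " %d", id);
        strncat(message, rankText, SIZE - strlen(message) - 1);
        MPI_Send(message, (int)strlen(message) + 1, MPI_CHAR, next, 0,
                 MPI_COMM_WORLD);
    }

    MPI_Finalize();
    return 0;
}
```

The send count is the string length plus one so that the terminating null character travels with the text, while the receive count is the full buffer capacity, which acts as an upper bound on the incoming message.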

To do:

Run the program, varying the value of the -np argument from 1 to 8.

Explain the behavior you observe. Is an ordering preserved?

Note which if statement indicates the conductor-worker pattern.

In our example, when will this communication pattern fail to execute properly and finish? What was added to check whether we can guarantee completion?

Note

Examine the output of this code, noticing how each process sends the results of its ‘work’ to the next process. This code forms the basis of what is called the pipeline pattern. You might want to do some research about when this can be useful. Though they don’t in this example, imagine that the processes could overlap by having each process ‘stream’ partially completed parts of its work to the next process in line for further processing.

Continue on to see more interesting patterns of collective communication using message passing.