2.3 Splitting loops: the parallel for pattern

Most programs have loops, and many programs use loops to calculate an approximation, such as an integral, or to work on the individual elements of a large array of data. The basic unit of work in every loop is a single iteration. Arguably the most common pattern in parallel computing with MPI is the parallel for loop pattern, in which the iterations of a loop are split among processes, each of which computes its portion of the loop at the same time as the others.

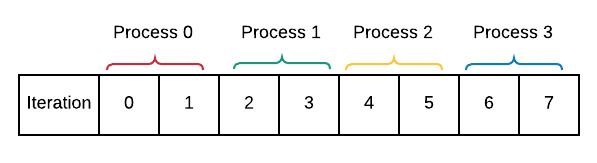

Run this code and envision that this is how the loop of 8 repetitions is being split by 4 processes:

Exercises:

Compare the source code to the output from running it.

Run the code with each of these numbers of processes (-np): 1, 2, 4, and 8.

Change REPS to 16, save, rerun, varying -np again.

Explain how this pattern divides the iterations of the loop among the processes.

The last exercise above is important: be sure that you can envision and explain how the loop was divided up. We call this decomposing the problem into smaller parts that each process can complete independently. This is the basis of the parallel for loop pattern.
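To make the decomposition concrete, here is a minimal sketch in plain Python that simulates how iterations could be assigned to processes, without using MPI at all. It assumes the loop is split into contiguous, nearly equal chunks, which is one common way to divide a loop and may differ in detail from the code you ran. The names `chunk_bounds` and `simulate` are hypothetical helpers introduced only for this illustration.

```python
def chunk_bounds(reps, num_procs, rank):
    """Return (start, stop) of the half-open iteration range owned by `rank`.

    Assumption: contiguous, nearly equal chunks. This is an illustrative
    choice, not necessarily the split used by the code in this section.
    """
    base, extra = divmod(reps, num_procs)
    # When reps is not a multiple of num_procs, the first `extra` ranks
    # each take one additional iteration.
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def simulate(reps, num_procs):
    """Map each rank to the list of iterations it would perform."""
    return {
        rank: list(range(*chunk_bounds(reps, num_procs, rank)))
        for rank in range(num_procs)
    }


if __name__ == "__main__":
    REPS = 8
    # Pretend we launched 4 processes; each line shows one process's share.
    for rank, iterations in simulate(REPS, 4).items():
        print(f"Process {rank} performs iterations {iterations}")
```

With REPS set to 8 and 4 processes, this sketch gives iterations 0 and 1 to process 0, 2 and 3 to process 1, 4 and 5 to process 2, and 6 and 7 to process 3. No iteration is shared or skipped, so each process can complete its part independently. In a real MPI program, each process would compute only its own range from its rank and the total number of processes, rather than looping over every rank as `simulate` does here.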

In the next section we will combine what we have seen so far to solve a simple application.