7.0 OpenACC: A new standard and compiler

The two technologies we have used so far, OpenMP and MPI, are at their core standards: documents that dictate what functionality a compiler and its associated libraries should provide. We have used gcc and g++ to compile C and C++ code, and these compilers conform to the OpenMP standard. The OpenMP standard has several versions, and each successive release of gcc and g++ adds most of the features of the newest version of the standard. Other C and C++ compilers also follow the OpenMP standard. MPI is likewise defined by a standard, which implementations deliver as libraries, compiler wrappers, and associated programs such as mpirun. There are two primary implementations of MPI that we can install: MPICH and Open MPI. These can be installed on clusters of machines ranging from Raspberry Pis to supercomputers, and also on single multicore servers.

These two standards, OpenMP for shared memory CPU computing and MPI for distributed networked systems or single machines, both target traditional multicore CPU computers. They give us reasonable scalability, letting programs run faster and work on larger problems.

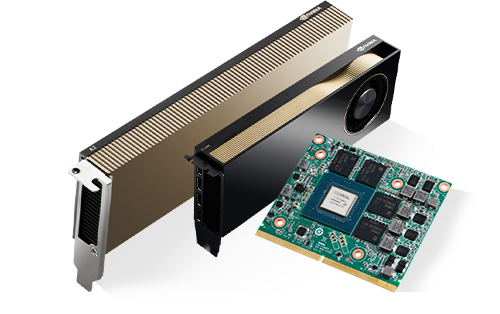

Yet many computational problems need much larger scalability. The field of supercomputing exists to develop hardware and software to handle these scalability needs. One way to provide more scalability is to turn to special devices that can be installed alongside a multicore CPU. Graphics processing units, or GPUs, are one such type of accelerator. Today GPUs come in all sorts of sizes, from small ones for mobile devices and laptops to large ones on separate cards containing thousands of small cores, each of which is slower than a typical CPU core.

Because GPUs are special hardware with thousands of cores, using them for parallelism is often called manycore computing. They were first designed to offload graphics computations and so speed up response time for applications such as games or visualizations. Now they are used for speeding up general computations as well.

In the PDC for Beginners book we introduced GPU computing with NVIDIA CUDA programming in chapters 4 and 5. If you are not familiar with CUDA, you might want to start there. This will give you a sense of how blocks of large numbers of threads can be set up on NVIDIA GPU devices using the CUDA programming model. However, you should be able to start from this chapter and also get a good feel for how we can bypass the CUDA programming model and use pragmas instead.

Another important aspect of using separate GPU devices is memory management: data needed for computation on the card must be moved from the host machine to the GPU device and back again. You should be aware of this as you explore these examples, because the time taken for data movement has ramifications for the overall scalability of a GPU solution.
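To make the data movement concrete, here is a minimal sketch that looks ahead to the OpenACC pragmas introduced later in this chapter. The function name `scale_by_two` and its parameters are hypothetical and are not taken from the book's code folders. The `copyin` clause asks for the input array to be copied from host to device when the data region starts, and `copyout` asks for the result to be copied back to the host when the region ends.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper (not from the book's repository):
 * writes 2 * in[i] into out[i] for every i in [0, n).
 * A compiler without OpenACC support ignores the pragmas,
 * so the loop then simply runs sequentially on the host. */
void scale_by_two(const float *in, float *out, int n)
{
    assert(in != NULL && out != NULL && n >= 0);

    /* copyin:  in[0..n-1] moves host -> device at region entry.
     * copyout: out[0..n-1] moves device -> host at region exit. */
    #pragma acc data copyin(in[0:n]) copyout(out[0:n])
    {
        /* The loop iterations are spread across the device's threads. */
        #pragma acc parallel loop
        for (int i = 0; i < n; i++) {
            out[i] = 2.0f * in[i];
        }
    }
}
```

Both transfers take time, so for a loop this small the copying can easily cost more than the computation itself. That trade-off is worth keeping in mind when judging the scalability of a GPU solution.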

We will be using a compiler called pgcc, which implements a different standard called OpenACC. The ACC part of OpenACC stands for accelerator. Separate cards such as GPUs are often referred to as accelerators when they are used for accelerating certain types of computations. The pgcc compiler is one of several compilers written originally by the Portland Group (PGI), which is now owned by NVIDIA, the major GPU manufacturer and creator of the CUDA compilers for running code on GPUs. Note that NVIDIA has renamed this compiler nvc. They also offer a C++ compiler for OpenACC called nvc++, as well as a Fortran compiler (nvfortran).

A new compiler

The pgcc/nvc compiler can compile code containing pragma lines, similar to those of OpenMP, into several different versions:

- a regular sequential version (when no pragmas are present)
- a shared memory version using OpenMP (backwards compatible with OpenMP)
- a multicore version for the CPU (new pragmas defined in the OpenACC standard)
- a manycore version for the GPU (additional pragmas for accelerator computation)

As you will see, the OpenACC standard and the PGI/NVIDIA compilers are designed to let software developers write one program, or begin with an existing sequential or OpenMP program, and easily transform it into a program that can solve a larger problem faster by using thousands of threads on a GPU device.
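As a rough sketch of the "one program, several versions" idea, the function below carries a single OpenACC pragma. The function `saxpy`, the file name `saxpy.c`, and the compiler flags in the comment are illustrative assumptions rather than material from this book; the Makefiles in the code folders show the options the examples actually use.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical example (not from the book's repository):
 * computes y[i] = a * x[i] + y[i] for every i in [0, n).
 *
 * The same source file can be built in different ways. The flags
 * below are assumptions based on recent NVIDIA HPC SDK releases;
 * check your compiler's documentation before relying on them:
 *   gcc -c saxpy.c                  pragma ignored, sequential code
 *   nvc -acc=multicore -c saxpy.c   loop spread across CPU cores
 *   nvc -acc=gpu -c saxpy.c         loop offloaded to the GPU
 */
void saxpy(int n, float a, const float *x, float *y)
{
    assert(x != NULL && y != NULL && n >= 0);

    /* copyin: x is only read on the device.
     * copy:   y is read and written, so it moves in both directions. */
    #pragma acc parallel loop copyin(x[0:n]) copy(y[0:n])
    for (int i = 0; i < n; i++) {
        y[i] = a * x[i] + y[i];
    }
}
```

The loop body is identical in every build. Only the compiler options decide whether it runs on one core, several CPU cores, or the GPU.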

Vector Addition as a basic example

The example we will start with is the simplest linear algebra example: adding two vectors together. We will examine several versions of this code that demonstrate how the pgcc OpenACC compiler can create the different versions mentioned above:

| Book Section | Code folder | Description |
|---|---|---|
| 7.1 | 1-sequential | 2 sequential versions, compiled with gcc and pgcc |
| 7.2 | 2-openMP | 2 OpenMP pragma versions, compiled with gcc and pgcc |
| 7.3 | 3-openacc-multicore | 1 OpenACC pragma version using just the multicore CPU, compiled with pgcc |
| 7.4 | 4-openacc-gpu | 1 OpenACC pragma version for the GPU device, compiled with pgcc |
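For orientation, the core of sequential vector addition can be sketched as a single loop. This is a minimal illustration with hypothetical names (`vector_add`, `a`, `b`, `c`, `n`), not the code from the 1-sequential folder, which may differ in types, setup, and timing.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical sequential baseline (not the book's actual code):
 * stores a[i] + b[i] in c[i] for every i in [0, n). */
void vector_add(const double *a, const double *b, double *c, size_t n)
{
    assert(a != NULL && b != NULL && c != NULL);

    /* Each iteration is independent of the others, which is what
     * makes this loop a natural candidate for parallel pragmas. */
    for (size_t i = 0; i < n; i++) {
        c[i] = a[i] + b[i];
    }
}
```

For example, calling `vector_add` on the arrays {1, 2, 3} and {4, 5, 6} with `n` equal to 3 fills the result array with {5, 7, 9}.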

The code examples in these sections of the book compile and run on a remote machine and display results here in your browser.

If you want to try the code on your own machine, the code for these examples is in a GitHub repo for the CSInParallel Project, inside a folder called IntermediateOpenACC/OpenACC-basics. Filename paths shown in this chapter, such as those in the Code folder column above, are relative to the IntermediateOpenACC/OpenACC-basics/vector-add folder.

Each code example's subfolder contains its own Makefile.