Barrier Synchronization and Timing MPI Code

16. The Barrier Synchronization Pattern

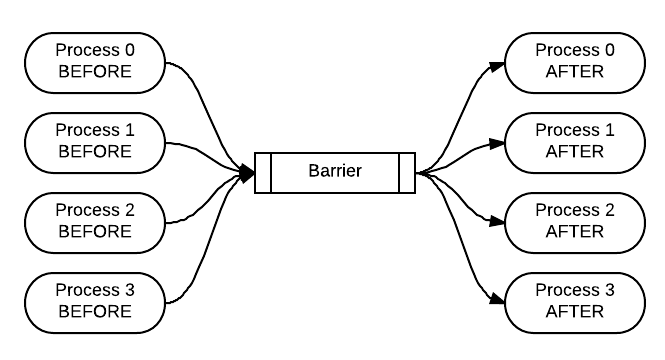

A barrier is used when you want all the processes to complete a portion of code before any of them continues. Use this exercise to verify that this synchronization occurs once you add the call to the MPI_Barrier() function. After adding the barrier call, all of the BEFORE strings should be printed before any of the AFTER strings. You can visualize the execution of the program with the barrier as in the diagram above, with time moving from left to right.

To do:

Run the program several times, noting the interleaved outputs.

Uncomment the MPI_Barrier() call; then rerun, noting how the output changes.

Explain what effect MPI_Barrier() has on process behavior.

17. Timing code using the Barrier Coordination Pattern

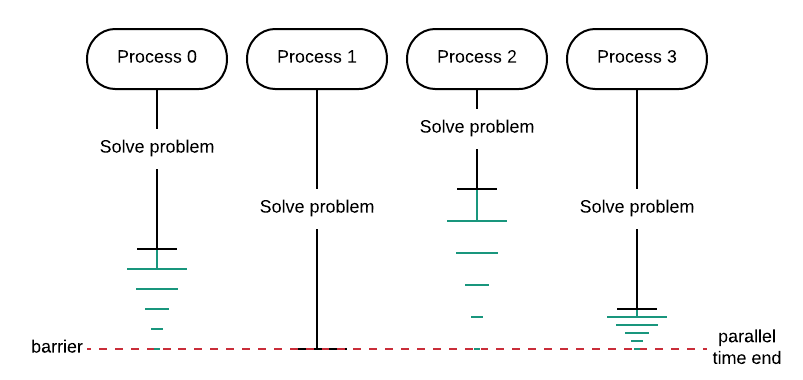

The primary purpose of this exercise is to show that one of the most practical uses of a barrier is to obtain legitimate timings for your code. The barrier ensures that all processes have finished their work before the conductor process records the end time. A process that finishes its portion before the others must wait at the barrier, as indicated in green in the diagram above. Thus, the measured parallel execution time is the time taken by the slowest process.

In the following code, note how we have artificially made the time for each process different.

To do:

Run the program both with the MPI_Barrier() call in place and with it commented out.

Run the code several times and determine the average, median, and minimum execution times, both with and without the barrier. You could use a spreadsheet for this.

Without the barrier, which process is being timed?

18. Timing code using the Reduction pattern

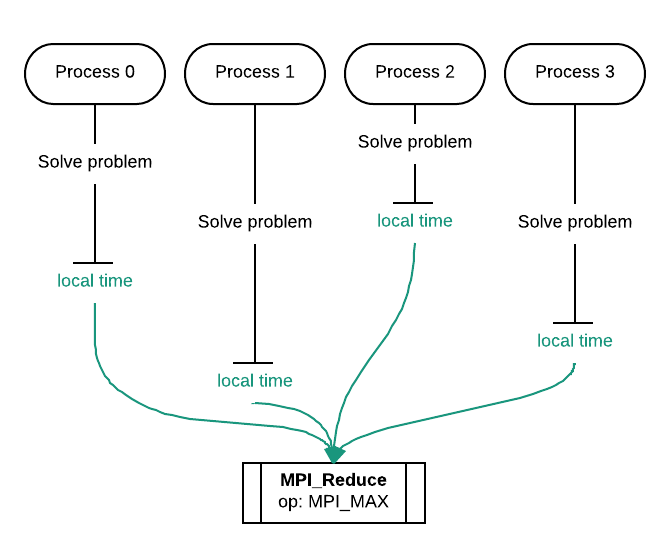

We can also use a reduction to obtain the parallel execution time of a program. In this example, each process individually records how long it took to finish. These local times are then reduced to a single time using the max operator (MPI_MAX), which yields the largest local time across all processes.

To do:

Run the program five times.

In a spreadsheet, compute the average, median, and minimum of the five times.

Explain the behavior of MPI_Reduce() in terms of localTime and totalTime.

Compare these results to those from the previous barrier+timing exercise.