Patterns used when threads share data values

9. Shared Data Algorithm Strategy: Parallel-for-loop pattern needs non-shared, private variables

In this example, you will try a parallel for loop where additional variables (i, j in the code) cannot be shared by all the threads, but must instead be private to each thread, which means that each thread has its own copy of that variable. In this case, the outer loop is being split into chunks and given to each thread, but the inner loop is being executed by each thread for each of the elements in its chunk. The loop counting variables must be maintained separately by each thread. Because they were initially declared outside the loops at the beginning of the program, by default these variables are shared by all the threads.

Note

Loop counter variables cannot be shared. This follows from the nature of a for loop: each thread updates the counter variables (i and j in this case) as it iterates, so threads sharing one copy would overwrite each other's position in the loop. With a single loop, one loop index i, and the pragma line just above the for loop in the code, OpenMP makes the index i private by default. That default covers only the loop that the pragma applies to. In the doubly nested loop, the inner counter j was declared outside the loops and stays shared, so we need to state explicitly that the variables should be private.

Also note that by default, variables declared before the parallel loop, other than its loop counter, are shared. This means that the 2D array m above was automatically shared by all threads forked in this loop.
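The private clause described above can be sketched as follows. This is a minimal illustration and not the patternlet's own code: the function name fill_matrix and the constant SIZE are assumptions made for the example. If the file is compiled without OpenMP support, the pragma is ignored and the loops run sequentially with the same result.

```c
#define SIZE 100   /* hypothetical matrix dimension */

/* Fills m so that m[i][j] == i + j, splitting the outer loop among threads. */
void fill_matrix(int m[SIZE][SIZE]) {
    int i, j;   /* declared outside the loops, so shared unless stated otherwise */

    /* private(i, j) gives every thread its own copy of both counters.
       The outer index i would be private anyway; the inner index j would not. */
    #pragma omp parallel for private(i, j)
    for (i = 0; i < SIZE; i++) {
        for (j = 0; j < SIZE; j++) {
            m[i][j] = i + j;   /* m is shared by default; each thread writes its own rows */
        }
    }
}
```

Removing j from the private clause would leave a single j shared by every thread, so the inner loops would interfere with one another.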

10. Helpful OpenMP programming practice: Parallel for loop without default sharing

The above situation can be confusing. When you are trying to determine whether a data value should be shared or private inside a block of code run by forked threads, it is easy to overlook which of the variables used in the block OpenMP has made shared by default and which it has made private. A helpful programming practice is to tell the OpenMP compiler not to default any variable to private or shared, by adding the clause default(none) to the pragma. Then you find each variable and set it properly yourself. The next example shows this. Note the phrase added on line 26. Note the error that the compiler provides when you first run it and what happens when you uncomment line 28.

Also note that we can extend the pragma onto several lines for readability by ending each line except the last with a \ (backslash) character (above enter and below backspace on most QWERTY keyboards).

Note

Using default(none) in a pragma and choosing yourself which variables are private and which are shared is a really helpful practice for ensuring that your code is correct, because it forces you to think about every variable the threads touch.
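A minimal sketch of this practice, including the backslash continuation, might look like the following. The function name fill_matrix_explicit and the constant SIZE are assumptions made for the example, not names from the patternlet.

```c
#define SIZE 100   /* hypothetical matrix dimension */

/* Same fill as a plain nested loop, but with every variable's sharing spelled out. */
void fill_matrix_explicit(int m[SIZE][SIZE]) {
    int i, j;

    /* default(none) turns off all defaults, so each variable used in the
       loop must appear in a clause. Every line but the last ends in a backslash. */
    #pragma omp parallel for default(none) \
            private(i, j) \
            shared(m)
    for (i = 0; i < SIZE; i++) {
        for (j = 0; j < SIZE; j++) {
            m[i][j] = i + j;
        }
    }
}
```

Deleting the shared(m) line should make an OpenMP compiler reject the loop, because m would then have no sharing attribute at all. SIZE needs no clause because it is a preprocessor constant, not a variable.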

11. Reduction without default sharing

Warning

When you use a reduction clause, the reduction variable is private by default and does not need to appear in a private clause. This will likely trip you up until you get used to it. If you want to practice, let’s revisit example 8 on the previous page. We’ve added the default(none) clause. Work on getting the private and shared clauses correct. Note what happens if you try to add sum to the private clause.

Note

Where did you decide the variable n should go: private or shared? An interesting compiler error occurs when you make that variable shared and haven’t initialized it in the function. With most compilers it is safe to make a variable that is only being read by each thread, such as n in this case, a shared variable.
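One possible arrangement of the clauses is sketched below. It is not the code of example 8: the function name sum_array and its parameters are assumptions made for the illustration, and it is only one reasonable answer to the exercise.

```c
/* Sums the first n elements of a, with every variable's sharing stated explicitly. */
long sum_array(const int *a, int n) {
    long sum = 0;
    int i;

    /* sum appears only in the reduction clause: each thread gets a private
       partial sum, and the partial sums are added together after the loop.
       a and n are only read, so sharing them is safe. */
    #pragma omp parallel for default(none) \
            private(i) shared(a, n) \
            reduction(+:sum)
    for (i = 0; i < n; i++) {
        sum += a[i];
    }
    return sum;
}
```

Listing sum in the private clause as well would give it two conflicting sharing attributes on one directive, which OpenMP does not allow.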

12. Race Condition: missing the mutual exclusion coordination pattern

When a variable must be shared by all the threads, as in the example below, an issue called a race condition can occur when the threads update that variable concurrently. This happens because updating the memory location takes several underlying machine instructions (read the value, change it, write it back), and two threads can interleave those instructions so that one update overwrites another. To prevent this, each thread must execute all of the instructions atomically, as one indivisible step, before another thread begins its own update, which ensures mutual exclusion between the threads when updating a shared variable. The OpenMP pragma shown in this code does this. To see the effect, follow the steps given in the comments at the top under ‘Exercise’.

Compile and run 10 times; note that it always produces the correct balance: $1,000,000.00

To parallelize, uncomment A, recompile and rerun multiple times, compare results

To fix: uncomment B, recompile and rerun, compare

Note

The atomic pragma only works in certain situations. To quote the OpenMP documentation:

“The atomic construct ensures that a specific storage location is accessed atomically, rather than exposing it to the possibility of multiple, simultaneous reading and writing threads that may result in indeterminate values.”

This means that atomic applies to a single statement that reads or writes one variable. Refer to the OpenMP documentation for the details of what types of statements you can make atomic and additional clauses you may need to add to the atomic directive.
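A minimal sketch of the fix, assuming a deposit loop that adds one dollar per iteration, is shown here. The function name deposit_atomic and its parameter are hypothetical and are not taken from the exercise code.

```c
/* Makes `deposits` deposits of $1.00 each and returns the final balance. */
double deposit_atomic(int deposits) {
    double balance = 0.0;   /* one copy, shared by all threads */
    int i;

    #pragma omp parallel for private(i) shared(balance)
    for (i = 0; i < deposits; i++) {
        /* Without this line, two threads could read the same old balance
           and one of the two deposits would be lost. */
        #pragma omp atomic
        balance += 1.0;
    }
    return balance;
}
```

The statement under the atomic directive is a single update of one storage location, which is the kind of statement the directive accepts.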

13. The Mutual Exclusion Coordination Pattern: two ways to ensure

Here is another way to ensure mutual exclusion in OpenMP that is more general than the atomic directive. Follow these instructions as given in the code:

Compile and run several times; note that it always produces the correct balance $1,000,000.00

Comment out A; recompile/run, and note incorrect result

To fix: uncomment B1+B2+B3, recompile and rerun, compare

Note

A critical section in shared memory parallel programming is a block of code that must be executed by one thread at a time. We have added the curly braces to make this clear. The mechanism for doing this within the OpenMP library is to provide mutual exclusion: each thread gets to run by excluding the others from running. This is an important pattern on shared memory systems for preventing race conditions on variables that must be shared by the threads.
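The critical section described in this note can be sketched as follows, again assuming a loop of one-dollar deposits. The function name deposit_critical is hypothetical.

```c
/* Makes `deposits` deposits of $1.00 each and returns the final balance. */
double deposit_critical(int deposits) {
    double balance = 0.0;   /* one copy, shared by all threads */
    int i;

    #pragma omp parallel for private(i) shared(balance)
    for (i = 0; i < deposits; i++) {
        /* Only one thread at a time may execute the block in curly braces. */
        #pragma omp critical
        {
            balance = balance + 1.0;
        }
    }
    return balance;
}
```

Unlike atomic, the block under critical may contain any number of statements, which is why it is the more general of the two mechanisms.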

14. Mutual Exclusion Coordination Pattern: compare performance

Here is an example of how to compare the performance of the atomic directive and the critical directive. Note that OpenMP provides a function, omp_get_wtime(), that returns the wall-clock time in seconds. Calling it before and after a section of the program and subtracting the two values tells us how long that section took to run.

Here is the exercise to try:

Compile, run, compare times for critical vs. atomic

Note how much more costly critical is than atomic

Research: Create an expression that, when assigned to balance, critical can handle but atomic cannot
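The timing approach in this exercise can be sketched as below. The helper names now_seconds, time_atomic, time_critical and compare_times are assumptions made for the illustration. The fallback to clock() exists only so that the sketch still builds when OpenMP is not enabled; in that case the pragmas are ignored and the two times say nothing about atomic versus critical.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#else
#include <time.h>
#endif

/* Current time in seconds: omp_get_wtime() when OpenMP is enabled. */
static double now_seconds(void) {
#ifdef _OPENMP
    return omp_get_wtime();
#else
    return (double)clock() / CLOCKS_PER_SEC;
#endif
}

/* Runs `reps` protected deposits with atomic; returns elapsed seconds. */
double time_atomic(int reps, double *balance_out) {
    double balance = 0.0;
    int i;
    double start = now_seconds();

    #pragma omp parallel for private(i) shared(balance)
    for (i = 0; i < reps; i++) {
        #pragma omp atomic
        balance += 1.0;
    }
    *balance_out = balance;
    return now_seconds() - start;
}

/* Runs `reps` protected deposits with critical; returns elapsed seconds. */
double time_critical(int reps, double *balance_out) {
    double balance = 0.0;
    int i;
    double start = now_seconds();

    #pragma omp parallel for private(i) shared(balance)
    for (i = 0; i < reps; i++) {
        #pragma omp critical
        {
            balance += 1.0;
        }
    }
    *balance_out = balance;
    return now_seconds() - start;
}

/* Prints both balances and both times side by side. */
void compare_times(int reps) {
    double a, c;
    double ta = time_atomic(reps, &a);
    double tc = time_critical(reps, &c);
    printf("atomic:   balance = %.2f, time = %f s\n", a, ta);
    printf("critical: balance = %.2f, time = %f s\n", c, tc);
}
```

Calling compare_times(1000000) from main, in a program built with OpenMP enabled (for example with the -fopenmp flag in GCC), prints the two times for comparison. The actual numbers depend on the machine and the number of threads.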

15. Mutual Exclusion Coordination Pattern: language difference

The following is a C++ code example to illustrate some language differences between C and C++. Try these exercises described in the code below:

Compile, run, note resulting output is correct.

Uncomment line A, rerun, note results.

Uncomment line B, rerun, note results.

Recomment line B, uncomment line C, rerun, note change in results.

Some Explanation

A C line like this:

printf("Hello from thread #%d of %d\n", id, numThreads);

is a single function call that is pretty much performed atomically, so you get pretty good output like this:

Hello from thread #0 of 4

Hello from thread #2 of 4

Hello from thread #3 of 4

Hello from thread #1 of 4

By contrast, the C++ line:

cout << "Hello from thread #" << id

<< " out of " << numThreads

<< " threads.\n";

has 5 different function calls, so the outputs from these functions get interleaved within the shared stream cout as the threads ‘race’ to write to it.

The other facet that this particular patternlet shows is that OpenMP’s atomic directive will not fix this – it is too complex for atomic, so the compiler flags that as an error. To make this statement execute indivisibly, you need to use the critical directive, providing a pretty simple case where critical works and atomic does not.

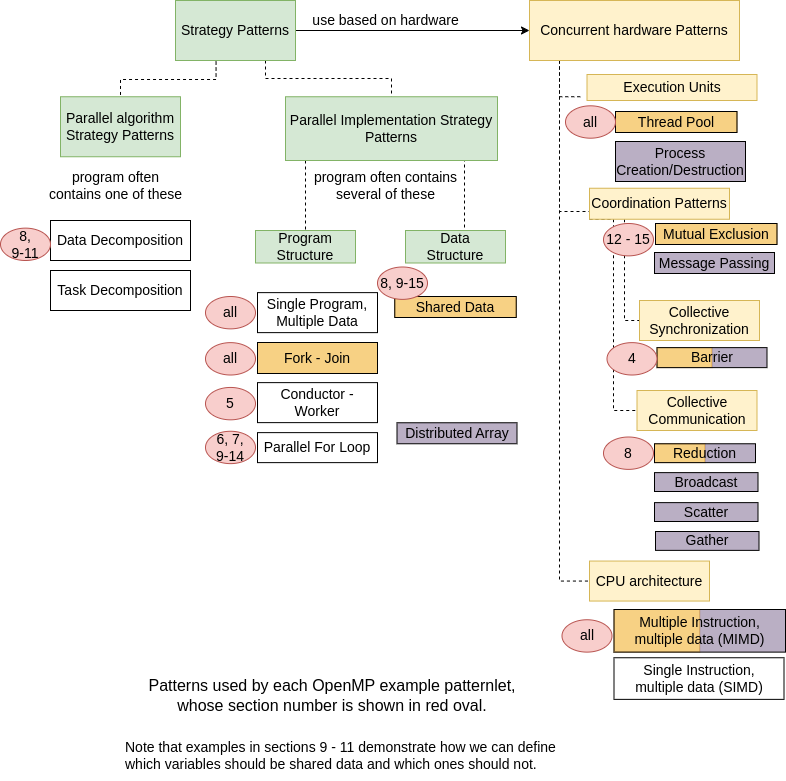

Summary Overview

We continue to fill in which patterns from our diagram appear in each of the examples, 9-15 on this page, which present the various ways that we can provide mutual exclusion for shared data. We also started with examples of how to declare certain data private to each thread, meaning that each thread gets its own copy of the data when it is forked.

At this point, you may want to review the above examples in this section to be certain why some use data decomposition and some do not.