3.2. Parallel for Loop Program Structure: chunks of 1

Navigate to: ../03.parallelLoop-chunksOf1/

Example usage:

make

mpirun -hostfile ~/hostfile -np N ./parallelLoopChunksOf1

Here N is the number of MPI processes to start.

Exercises:

Run the program with each of these values of N: 1, 2, 4, and 8.

Compare source code to output.

Change REPS to 16, save, rerun, varying N again.

Explain how this pattern divides the iterations of the loop among the processes.

- The processes are assigned single iterations in order of their process number.

- In this code, single iterations (chunks of size 1) are assigned consecutively to each process.

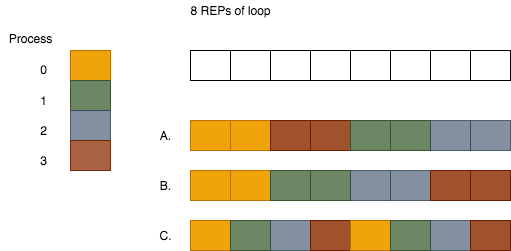

Q-1: Which of the following is the correct assignment of loop iterations to processes for this code, when REPS is 8 and numProcesses is 4?

3.2.1. Explore the code

In the code below, we again use the variable called REPS for the total amount of work, or repetitions, that the for loop is accomplishing. This particular code is designed so that the number of repetitions should be greater than or equal to the number of processes requested.

Note: Typically in real problems, the number of repetitions is much higher than the number of processes. We keep it small here to illustrate what is happening.

As in the last example, every process runs the same program and takes the same branch of the if statement. In a valid run, where numProcesses is no greater than REPS, that is the else branch containing the for loop. In that loop, the process whose id is 0 starts at iteration 0, then skips to 0 + numProcesses for its next iteration, and so on. Similarly, process 1 starts at iteration 1, skips next to 1 + numProcesses, and continues until REPS is reached. Each process performs similar single ‘slices’ or ‘chunks of size 1’ of the whole loop.

    #include <stdio.h>  // printf()
    #include <mpi.h>    // MPI

    int main(int argc, char** argv) {
        const int REPS = 8;  // total number of loop iterations
        int id = -1, numProcesses = -1, i = -1;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &id);            // this process's id
        MPI_Comm_size(MPI_COMM_WORLD, &numProcesses);  // total number of processes

        if (numProcesses > REPS) {
            // Too many processes: only process 0 reports the problem.
            if (id == 0) {
                printf("Please run with -np less than or equal to %d.\n", REPS);
            }
        } else {
            // Start at this process's id and stride by numProcesses.
            for (i = id; i < REPS; i += numProcesses) {
                printf("Process %d is performing iteration %d\n", id, i);
            }
        }

        MPI_Finalize();
        return 0;
    }