2.1. Single Program, Multiple Data

The single program multiple data (SPMD) pattern is one of the most common patterns and is fundamental to the way most parallel programs are structured. The SPMD pattern forms the basis of most of the examples that follow in this tutorial.

Let’s navigate back to the Patternlets/MPI folder. Under that folder, you will find a subfolder called 00.spmd:

cd ~/CSinParallel/Patternlets/MPI/00.spmd/

To run the code in this folder, type the following series of commands:

make

mpirun -hostfile ~/hostfile -np 4 ./spmd

The make command compiles the code. The mpirun command tells MPI to run the executable on the available processors specified by hostfile.

The -np flag sets the number of processes to start. Here, MPI starts 4 processes.

Exercise:

Rerun, using varying numbers of processes, e.g. 2, 8, 16

2.1.1. Explore the code

Here is the heart of this program that illustrates the concept of a single program that uses multiple processes, each containing and producing its own small bit of data (in this case printing something about itself).

    #include <stdio.h>   // printf()
    #include <mpi.h>     // MPI functions

    int main(int argc, char** argv) {
        int id = -1, numProcesses = -1, length = -1;
        char myHostName[MPI_MAX_PROCESSOR_NAME];

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &id);
        MPI_Comm_size(MPI_COMM_WORLD, &numProcesses);
        MPI_Get_processor_name(myHostName, &length);

        printf("Greetings from process #%d of %d on %s\n",
               id, numProcesses, myHostName);

        MPI_Finalize();
        return 0;
    }

Let’s look at each line in main and the MPI constructs referenced:

The first line declares the variables id, numProcesses, and length and initializes each to -1. Since each process runs this function in parallel, each process has its own local (private) copy of these variables.

The second line declares a character array called myHostName, which will be used to store the hostname. Its size is MPI_MAX_PROCESSOR_NAME, a constant defined by MPI that specifies the maximum length that any processor name can have.

The MPI_Init function initializes MPI. Every MPI program must call MPI_Init before it calls almost any other MPI function. Typically, MPI_Init takes pointers to the arguments of main as its parameters, though this is not required.

The MPI_Comm_rank function gets the rank (or unique identifier) of the calling process. There are two arguments to this function:

The first is MPI_COMM_WORLD, a predefined communicator. A communicator specifies the set of processes that can communicate with each other in the context of an MPI program. MPI_COMM_WORLD is the default communicator and includes all of the processes started for the program.

The second argument is a pointer to the id variable. When this function returns, id holds a value that is unique to this process within the communicator.

The MPI_Comm_size function takes the communicator MPI_COMM_WORLD as its first parameter and a pointer to the numProcesses variable as its second. When this function returns, numProcesses contains the total number of processes running in the context of this MPI program.

The MPI_Get_processor_name function takes a character array as its first parameter and a pointer to a length variable as its second. When this function returns, myHostName contains the name of the host that this process runs on, and length contains the number of characters in that name. On a distributed system, processes can have different hostnames. However, if multiple processes run on a single host, those processes share the same hostname.

Finally, the MPI_Finalize function indicates that the MPI environment is no longer needed.

The five functions above appear in almost every MPI program. MPI programs call MPI_Init at the beginning of the program and MPI_Finalize near the end. The MPI_Comm_rank and MPI_Comm_size functions are needed whenever a process must know which process it is and how many processes there are in total. The MPI_Get_processor_name function is often used for debugging and for analyzing where certain computations are running.
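One common reason a program needs its rank and the process count is to decide which share of the work belongs to each process. The sketch below is not part of the patternlet and makes no MPI calls. It assumes that id and numProcesses hold the values that MPI_Comm_rank and MPI_Comm_size would fill in, and the helper names block_count and block_start are hypothetical, chosen here for illustration only.

```c
#include <assert.h>

/* Hypothetical helper (not part of MPI or the patternlet):
   number of items that process `id` owns when n items are
   split as evenly as possible across numProcesses processes. */
long block_count(int id, int numProcesses, long n) {
    assert(numProcesses > 0 && id >= 0 && id < numProcesses && n >= 0);
    long base  = n / numProcesses;   /* items every process gets */
    long extra = n % numProcesses;   /* leftover items */
    /* The first `extra` processes each take one leftover item. */
    return base + (id < extra ? 1 : 0);
}

/* Hypothetical helper: index of the first item owned by process `id`
   under the same split. */
long block_start(int id, int numProcesses, long n) {
    assert(numProcesses > 0 && id >= 0 && id < numProcesses && n >= 0);
    long base  = n / numProcesses;
    long extra = n % numProcesses;
    /* Processes before `id` hold id * base items plus one leftover
       item for each of them that received one. */
    return id * base + (id < extra ? id : extra);
}
```

With 10 items and 4 processes, this split gives process 0 items 0 to 2, process 1 items 3 to 5, process 2 items 6 and 7, and process 3 items 8 and 9. Every process runs the same two functions, and only the value of id changes the result.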

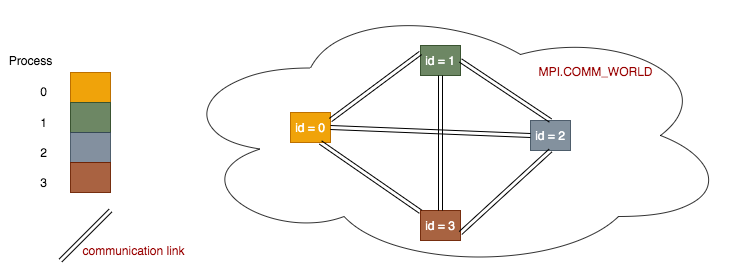

The fundamental idea of message passing programs can be illustrated like this:

Each process is set up within a communication network to be able to communicate with every other process via communication links. Each process is set up to have its own number, or id, which starts at 0.

Note

Each process holds its own copies of the four variables declared in main. So even though there is a single program, it runs multiple times in separate processes, each holding its own data values. This is the reason for the name of the pattern this code represents: single program, multiple data. The printf call at the end of main() shows this, since each process prints different output based on its own data.